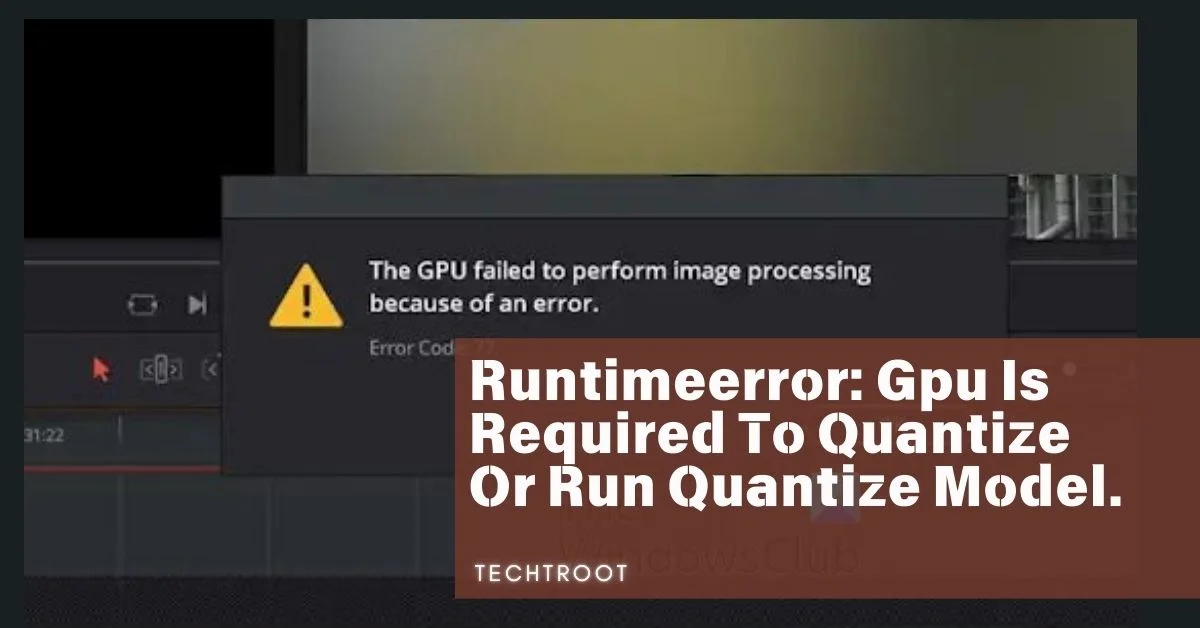

When working with deep learning frameworks like PyTorch, running into the “torch is not able to use GPU” error can be frustrating. I totally understand; it has happened to me several times :)

This problem usually happens when PyTorch can’t find or use the GPU, so the work falls back to the CPU and runs slower and less efficiently.

Hi! It’s Jack, and I’ll walk you through this error in detail. So, let’s get into it step by step!

What kind of problem is “torch is not able to use GPU”?

The problem of torch not being able to use GPU is typically rooted in either software configuration issues or hardware incompatibilities.

This issue can show up in different ways. For example, PyTorch might not recognize your GPU at all, or it might detect the GPU but not use it during training or testing. Sometimes, the problem could be caused by missing or outdated CUDA drivers, incorrect PyTorch installation, or hardware that isn’t supported.

What happens when torch is not able to use GPU?

When this happens, it seriously affects how well your machine-learning models perform. The GPU is built to handle many calculations at once and process large amounts of data quickly. Without it, your models will run on the CPU, which means slower training times, less efficiency, and sometimes even the inability to handle more complex models.

Having worked in this field for years, I’ve encountered these issues often. Let me guide you through the details and solutions to get your GPU working smoothly with PyTorch.

How to Resolve the “torch is not able to use GPU” Problem? – 6 Proven Steps!

1. Verify GPU Availability:

Start by checking if your system’s GPU is recognized by PyTorch. You can do this by running torch.cuda.is_available() in your Python environment. If it returns False, it means that PyTorch is not detecting your GPU.
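
Here is a minimal sketch of that check, assuming PyTorch is already installed in the Python environment you are running. The helper name describe_cuda_devices is my own, not part of PyTorch; it simply lists every GPU that PyTorch can see.

```python
import torch


def describe_cuda_devices():
    """Return a list of (index, name) pairs for each GPU PyTorch can see."""
    if not torch.cuda.is_available():
        return []
    return [
        (index, torch.cuda.get_device_name(index))
        for index in range(torch.cuda.device_count())
    ]


devices = describe_cuda_devices()
print("CUDA available:", torch.cuda.is_available())
for index, name in devices:
    print(f"  cuda:{index} -> {name}")
```

An empty list here is the same signal as False from torch.cuda.is_available(): PyTorch is not detecting a usable GPU.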

2. Check CUDA Installation:

Ensure that the correct version of CUDA is installed and properly configured on your system. Compatibility between CUDA, your GPU, and the version of PyTorch you are using is critical. Reinstalling or updating CUDA may resolve the issue.
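
One quick way to see which CUDA version your PyTorch build expects is to ask PyTorch itself. This is a rough sketch, assuming PyTorch imports cleanly; it reports what the build was compiled against, not what is installed system-wide.

```python
import torch

# torch.version.cuda is a string such as "12.1" on CUDA builds,
# and None on builds without CUDA support (for example CPU-only builds).
cuda_build = torch.version.cuda

print("PyTorch version:", torch.__version__)
print("Built against CUDA:", cuda_build)

if cuda_build is None:
    print("This build has no CUDA support, so it cannot use an NVIDIA GPU.")
```

If the value is None, no driver or CUDA update will help until PyTorch itself is reinstalled with CUDA support.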

3. Update PyTorch:

Sometimes, the issue is due to a bug in the version of PyTorch you are using. Make sure you are on the latest version of PyTorch, which may include fixes for GPU-related issues. Also check our guide on “RuntimeError: Torch Is Not Able to Use GPU; Add --skip-torch-cuda-test to COMMANDLINE_ARGS Variable to Disable This Check”.

4. Install GPU Drivers:

Make sure that the GPU drivers are up-to-date. Outdated or missing drivers are a common cause of GPU detection issues. Visit the GPU manufacturer’s website to download the latest drivers.
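
To see which driver is currently installed, you can query it from Python. The sketch below assumes an NVIDIA GPU and relies on the nvidia-smi tool that ships with the NVIDIA driver; the function name query_nvidia_driver is just an illustrative helper.

```python
import shutil
import subprocess


def query_nvidia_driver():
    """Return 'name, driver_version' lines from nvidia-smi, or None if unavailable."""
    if shutil.which("nvidia-smi") is None:
        return None  # the tool is not on PATH, so the driver is likely missing
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            timeout=10,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    if result.returncode != 0:
        return None
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


driver_info = query_nvidia_driver()
if driver_info is None:
    print("nvidia-smi did not respond; the NVIDIA driver may be missing or broken.")
else:
    for line in driver_info:
        print(line)
```

If this prints nothing useful, fix the driver first, because PyTorch cannot reach a GPU that the driver itself does not report.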

5. Reinstall PyTorch with CUDA Support:

If the above steps do not work, consider reinstalling PyTorch with CUDA support explicitly. Use the official PyTorch website to find the correct installation command for your environment.

6. Test with a Simple Model:

After performing the above steps, test if the GPU is being utilized by running a simple model in PyTorch. If the GPU is still not being used, additional troubleshooting may be required.
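
A simple test could look like the sketch below. The tiny nn.Linear model and the random batch are placeholders I picked for illustration; the point is only to see which device the output ends up on.

```python
import torch
from torch import nn

# Pick the GPU when PyTorch can see one, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

model = nn.Linear(4, 2).to(device)        # move the model's weights to the device
batch = torch.randn(8, 4, device=device)  # create the input on the same device

with torch.no_grad():
    output = model(batch)

print("Forward pass ran on:", output.device)
```

If this prints cpu on a machine that has a GPU, go back through the earlier steps before debugging your real model.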

What Are the Potential Reasons for “torch is not able to use GPU”?

Incompatible Hardware: Your GPU might be too old or not supported by the version of CUDA and PyTorch you are using.

Incorrect Installation: PyTorch may not have been installed with CUDA support. This can happen if the wrong installation command was used or if CUDA was not installed correctly.

Outdated Drivers: GPU drivers need to be up-to-date to work correctly with PyTorch. Outdated drivers can cause compatibility issues, preventing PyTorch from using the GPU.

Conflicting Software: Other software on your system might conflict with PyTorch’s ability to use the GPU. This could include different versions of CUDA, conflicting libraries, or even other deep learning frameworks.

Environment Variables: Incorrectly configured environment variables can lead to PyTorch not recognizing the GPU. Check that variables like CUDA_HOME point to the right CUDA installation and that CUDA_VISIBLE_DEVICES, if it is set, is not hiding your GPU.
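
As a rough sketch of an environment check, the snippet below looks at CUDA_VISIBLE_DEVICES, a variable the CUDA runtime reads to decide which GPUs a process may see. It is my addition to the list (the text above only names CUDA_HOME), and the helper name visible_devices_status is hypothetical.

```python
import os


def visible_devices_status(env=os.environ):
    """Describe how CUDA_VISIBLE_DEVICES affects GPU visibility."""
    value = env.get("CUDA_VISIBLE_DEVICES")
    if value is None:
        return "unset: all GPUs are visible"
    if value.strip() in ("", "-1"):
        return "set to hide every GPU"
    return f"restricted to: {value}"


print("CUDA_VISIBLE_DEVICES:", visible_devices_status())
print("CUDA_HOME:", os.environ.get("CUDA_HOME", "not set"))
```

A value that hides every GPU is easy to miss, because it is often left over from an earlier experiment or a launcher script.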

What Happens to GPU Temperature When the “Torch Is Not Able to Use GPU” Error Occurs?

When you encounter the “torch is not able to use GPU” error, the GPU temperature generally remains low or unchanged. This is because the GPU is not being actively used for processing tasks.

Here’s what happens:

- Low Activity: GPU temperature stays low as there’s minimal activity.

- No Load: GPU doesn’t heat up because it’s not processing tasks.

- Cooling Impact: The cooling system doesn’t need to work hard, keeping temperatures stable.

In summary, if you’re seeing low GPU temperatures when PyTorch is not able to use the GPU, it’s usually a sign that the GPU isn’t being engaged as it should be.
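
If you want to watch this yourself, here is a small sketch that reads temperature and utilization, assuming an NVIDIA GPU with nvidia-smi on the PATH. The helper names parse_gpu_stats and read_gpu_stats are my own.

```python
import shutil
import subprocess

QUERY = [
    "nvidia-smi",
    "--query-gpu=temperature.gpu,utilization.gpu",
    "--format=csv,noheader,nounits",
]


def parse_gpu_stats(text):
    """Parse 'temperature, utilization' lines into (temp_c, util_pct) tuples."""
    stats = []
    for line in text.splitlines():
        parts = [part.strip() for part in line.split(",")]
        # Skip lines that are not two plain numbers (for example "[N/A]").
        if len(parts) == 2 and all(part.isdigit() for part in parts):
            stats.append((int(parts[0]), int(parts[1])))
    return stats


def read_gpu_stats():
    """Run nvidia-smi and return parsed stats, or an empty list if it fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(QUERY, capture_output=True, text=True, timeout=10)
    except (OSError, subprocess.TimeoutExpired):
        return []
    return parse_gpu_stats(result.stdout) if result.returncode == 0 else []


for index, (temp_c, util_pct) in enumerate(read_gpu_stats()):
    print(f"GPU {index}: {temp_c} C, {util_pct}% utilization")
```

Utilization stuck near zero while training is supposedly running tells the same story as the low temperature: the work is not reaching the GPU.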

Frequently Asked Questions:

Does PyTorch automatically use the GPU?

Not by default. PyTorch creates tensors and models on the CPU unless you tell it otherwise, even when a GPU is available and correctly configured. To use the GPU, your code has to move tensors and models there explicitly, for example with .cuda() or .to("cuda").
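
A short sketch of what that looks like in practice, assuming a default PyTorch setup where nothing has changed the default device:

```python
import torch

x = torch.ones(2, 2)  # new tensors are created on the CPU by default

# Choose the GPU only when PyTorch can actually see one.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
x_on_device = x.to(device)  # explicit move; leaves the tensor on the CPU if no GPU

print(x.device, "->", x_on_device.device)
```

Writing the device choice this way keeps the same script working on machines with and without a GPU.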

How do I check if PyTorch is using the GPU?

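Start by running torch.cuda.is_available(). If it returns True, the GPU is available, and you can move your model and data to it. That call only tells you the GPU can be used, so to confirm it is actually being used, check the device attribute of your tensors or of the model’s parameters.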
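
Here is one way to inspect where a model lives, as a minimal sketch. The helper module_device is a name I made up, and the nn.Linear layer stands in for your real model.

```python
import torch
from torch import nn


def module_device(module: nn.Module) -> torch.device:
    """Return the device that holds the module's first parameter."""
    return next(module.parameters()).device


model = nn.Linear(3, 1)
print("Before moving:", module_device(model))

if torch.cuda.is_available():
    model = model.to("cuda")
print("After moving:", module_device(model))
```

If the second line still says cpu on a GPU machine, the model was never moved, or PyTorch cannot see the GPU at all.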

What should I do if my GPU is not being detected?

If your GPU is not being detected, ensure that CUDA is properly installed, and your GPU drivers are up-to-date. You may also need to reinstall PyTorch with CUDA support.

To Sum Up:

The issue of torch not being able to use GPU can be a significant roadblock in deep learning tasks, but with the right steps, it can be resolved.

By ensuring that your system’s hardware and software are compatible, and by properly configuring PyTorch and CUDA, you can restore GPU functionality and enhance the performance of your models.

Addressing these issues not only improves the efficiency of your work but also allows you to tackle more complex and resource-intensive tasks with confidence.